Weekly Zeitgeist: Supabase Security Issues & Vibe Coding Making It Worse!

Plus: Spotify’s 300TB data scrape, FCC’s move to ground foreign drones, and Waymo’s failures exposed during SF blackout — Powered by Zeitgeister

What is Weekly Zeitgeist?

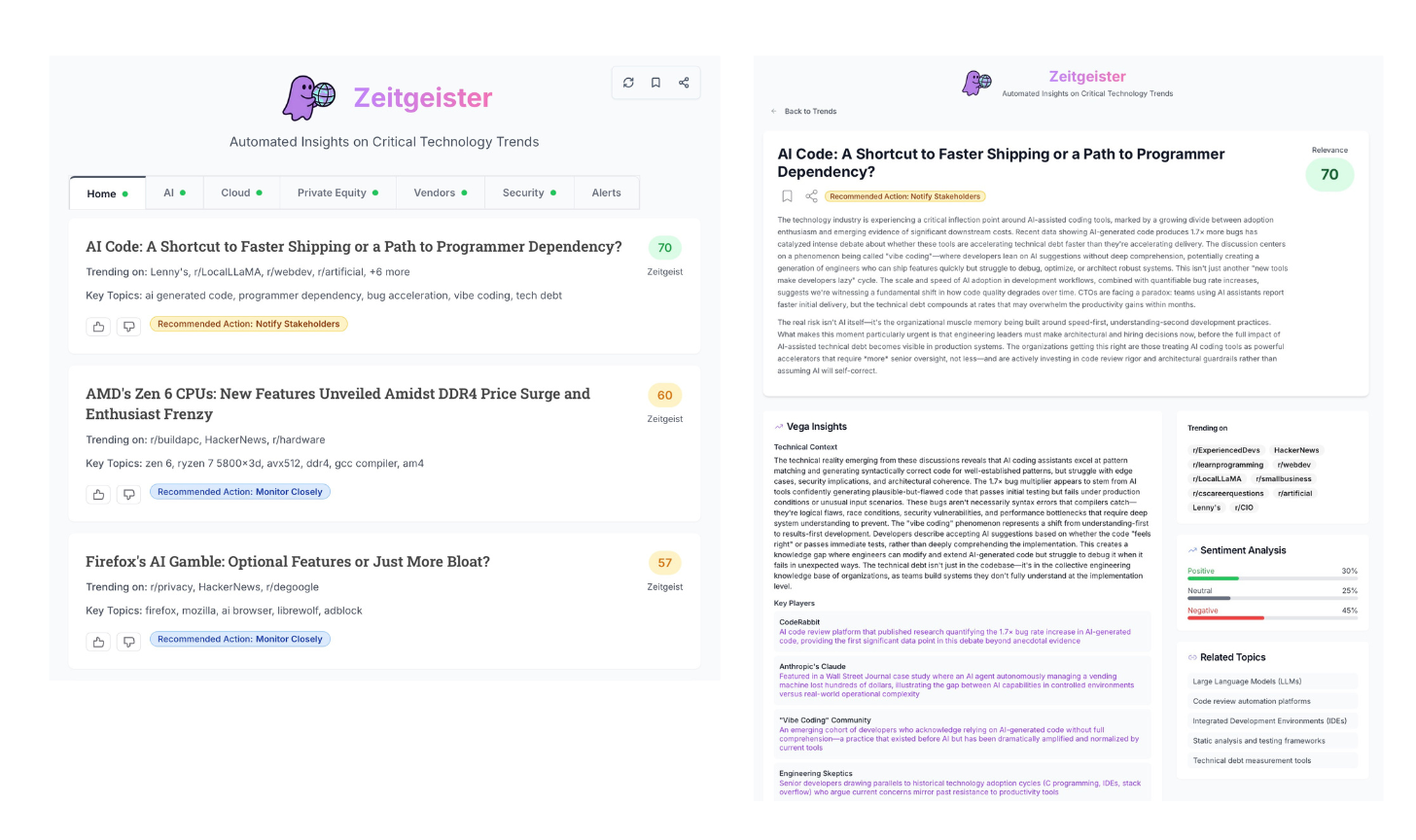

Weekly Zeitgeist is a new series powered by Zeitgeister, a tool I built to track what’s actually moving in tech, rank top stories, and turn them into usable insight. Zeitgeister crawls real technical conversations from across the web (forums, blogs, etc.), surfaces the most relevant trends, and generates context, risks/opportunities, and stakeholder-ready talking points.

Each week, I pull one high-signal headline per day from Zeitgeister, attach a brief summary, and then share why it matters if you’re building or running software at scale.

Vol III. Infrastructure Failures, Platform Exploits, and the Velocity-Security Gap

[Week of December 22, 2025]

This Week at a Glance

Monday’s Headline: Flock: AI Cameras Exposed Online—A Surveillance Nightmare Unfolds

Tuesday’s Headline: Waymo’s Robotaxis Hit a Snag: Traffic Chaos in San Francisco as Blackout Leaves Self-Driving Cars Stranded

Wednesday’s Headline: FCC Ban Grounds Foreign Drones, Shakes Up U.S. Market

Thursday’s Headline: Spotify: 300TB Data Scrape Exposes Streaming Security Gaps and Fuels Shadow Libraries

Friday’s Headline: Supabase: Ignoring RLS Warnings? Your Data Might Be Public—Read the Fine Print

Monday – Flock: AI Cameras Exposed Online—A Surveillance Nightmare Unfolds

Sentiment Analysis: 10% Positive | 70% Negative | 20% Neutral

What happened

Flock, a major AI-powered surveillance provider serving 5,000+ law enforcement agencies, suffered a security breach that exposed live camera feeds to the internet without authentication.

Investigators accessed the exposed feeds and tracked their own movements through Flock’s network, demonstrating that the company’s Condor pan-tilt-zoom cameras had been left without proper security controls.

The breach reveals fundamental failures in IoT security architecture for surveillance-as-a-service, where AI-powered tracking systems are deployed without adequate security oversight or defense-in-depth strategies.

Why it matters

Surveillance systems are now a critical infrastructure risk: Exposed feeds can reveal sensitive location, movement, and operational patterns at scale.

Vendor mistakes become customer liability: Cities, agencies, and communities deploying these tools inherit reputational and legal exposure.

Regulatory scrutiny will rise fast: Public safety + AI surveillance failures invite audits, new controls, and procurement pressure.

Actionable Insights

Audit all surveillance and IoT endpoints: Verify authentication, network isolation, encryption, and access logging—prioritize internet-facing systems with sensitive data.

Raise vendor security requirements before deployment: Demand secure-by-default configs, third-party penetration testing, and documented incident response commitments.

Implement surveillance data governance: Define collection scope, access controls, retention policies, and breach notification procedures.
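The audit step above can be sketched as a simple checklist pass over an endpoint inventory. This is a minimal illustration, not a scanner: the `Endpoint` fields and the sample names are hypothetical, and in practice the values would come from your own asset inventory or probe results.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    # Hypothetical inventory record; field names are assumptions for this sketch.
    name: str
    internet_facing: bool
    requires_auth: bool
    encrypted_transport: bool
    access_logging: bool


def audit(endpoints):
    """Return (name, failed_checks) pairs, internet-facing endpoints first."""
    findings = []
    for ep in endpoints:
        failed = [
            check
            for check, ok in (
                ("authentication", ep.requires_auth),
                ("encryption", ep.encrypted_transport),
                ("access logging", ep.access_logging),
            )
            if not ok
        ]
        if failed:
            findings.append((ep, failed))
    # Internet-facing endpoints sort first, then those with the most failed checks.
    findings.sort(key=lambda f: (not f[0].internet_facing, -len(f[1])))
    return [(ep.name, failed) for ep, failed in findings]
```

Sorting internet-facing endpoints to the top mirrors the prioritization in the first insight; endpoints that pass every check are left out of the report.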

Boardroom Talking Point

“The Flock breach demonstrates that surveillance vendors are part of our security perimeter—we should assume misconfigurations exist at scale and tighten vendor audits, security requirements, and data governance before regulators or attackers force action.”

Tuesday – Waymo’s Robotaxis Hit a Snag: Traffic Chaos in San Francisco as Blackout Leaves Self-Driving Cars Stranded

Sentiment Analysis: 15% Positive | 55% Negative | 30% Neutral

What happened

Blackout-triggered failure mode: A San Francisco power outage took out key infrastructure (signals/traffic systems), and Waymo vehicles ended up stranded at intersections instead of navigating the “signals down” scenario gracefully.

Fleet-wide operational halt: The incident forced Waymo to pause service citywide, turning an external infrastructure failure into a full service interruption.

Readiness gap exposed: This wasn’t “bad driving”—it surfaced an autonomy boundary where the system appears optimized for normal conditions, but brittle when the environment fails systemically.

Why it matters

Resilience is the product: In real-world deployments, the defining trait isn’t peak autonomy—it’s how safely the system degrades when dependencies fail.

Regulatory + trust headwinds: High-visibility urban failures become fuel for regulators and municipalities to slow permits, tighten requirements, or constrain expansion.

Not just an AV problem: Any AI system relying on external inputs (infrastructure, vendors, data feeds) needs explicit “degraded mode” behavior, or it will fail in the most damaging way even when each component behaves as designed.

Actionable Insights

Map dependency failure modes: Inventory the external infrastructure/data your system assumes, then define what “safe behavior” looks like when each goes missing/incorrect/partial.

Simulate infrastructure collapse: Add outage scenarios (missing signals, degraded sensors, upstream vendor failures) to testing and incident drills—not just component-level faults.

Define + communicate operational boundaries: Be explicit internally (and externally where needed) about the conditions under which the system is designed to operate, and what the fallback is when those conditions break.
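One way to make “degraded mode” explicit is to write the dependency-to-fallback mapping down as data and let the system pick the most conservative mode that any unhealthy dependency requires. The sketch below is illustrative only: the dependency names, modes, and mapping are hypothetical and say nothing about how Waymo's stack works.

```python
from enum import Enum


class Health(Enum):
    OK = "ok"
    DEGRADED = "degraded"  # partial, stale, or suspect data
    DOWN = "down"


class Mode(Enum):
    NORMAL = "normal"
    CAUTIOUS = "cautious"    # reduced capability
    SAFE_STOP = "safe_stop"  # stop taking on new work


# Ordered from least to most conservative.
SEVERITY = [Mode.NORMAL, Mode.CAUTIOUS, Mode.SAFE_STOP]

# Hypothetical dependency map: what "safe behavior" means when each input fails.
FALLBACKS = {
    "upstream_data_feed": {Health.DEGRADED: Mode.CAUTIOUS, Health.DOWN: Mode.CAUTIOUS},
    "vendor_api": {Health.DEGRADED: Mode.CAUTIOUS, Health.DOWN: Mode.SAFE_STOP},
}


def select_mode(status):
    """Pick the most conservative mode required by any unhealthy dependency."""
    worst = Mode.NORMAL
    for dependency, health in status.items():
        if health is Health.OK:
            continue
        # Unmapped dependency or missing rule: fail closed rather than guess.
        mode = FALLBACKS.get(dependency, {}).get(health, Mode.SAFE_STOP)
        if SEVERITY.index(mode) > SEVERITY.index(worst):
            worst = mode
    return worst
```

Failing closed on unmapped dependencies is a design choice for this sketch; the point is that an unplanned failure should surface as a gap in the map during testing rather than as improvised behavior in production.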

Boardroom Talking Point

“Waymo’s blackout incident demonstrates that real-world AI is judged on failure mode, not best-case performance. We should treat dependency-outage scenarios as first-class requirements—with graceful degradation and clear operational boundaries—before external failures become service interruptions for us.”

Wednesday – FCC Ban Grounds Foreign Drones, Shakes Up U.S. Market

Sentiment Analysis: 33% Positive | 33% Negative | 34% Neutral

What happened

FCC blocks foreign drone approvals: The FCC moved to block equipment authorization for new foreign-made drones and critical components, with DJI, the dominant player by market share, as the primary target.

Existing fleets enter a sunset scenario: Current drones may remain legal, but refresh/expansion paths get constrained—forcing multi-year transition planning most orgs haven’t budgeted for.

National security drives procurement: Concerns about data transmission, remote access, and critical infrastructure monitoring are now directly shaping what hardware can be deployed.

Why it matters

Forced technology migration risk: This is a roadmap + capital planning event, not a policy headline—fleets, vendors, and support plans get disrupted.

Capability gaps during transition: Domestic alternatives may lag on performance and cost, creating operational risk while orgs retool procurement and workflows.

Decoupling spreads to more categories: This is part of a broader pattern of restricting Chinese tech across critical infrastructure domains—expect “next categories” to follow.

Actionable Insights

Fleet inventory + replacement plan: Catalog models, mission criticality, expected lifespan, and support dependencies—prioritize replacements by operational risk, not blanket swaps.

Vendor diversification pilots: Start hands-on pilots with compliant domestic alternatives now, before demand spikes and lead times stretch.

Use-case segmentation: Separate mission-critical operations (higher performance needs) from commodity use cases where compliant options exist today.

Boardroom Talking Point

“This is effectively a forced migration: our current drones may keep flying, but the refresh path just changed. We need a fleet inventory, a transition plan, and vendor diversification now—before compliance and supply constraints turn it into a fire drill.”

Thursday – Spotify: 300TB Data Scrape Exposes Streaming Security Gaps and Fuels Shadow Libraries

Sentiment Analysis: 15% Positive | 60% Negative | 25% Neutral

What happened

300TB catalog scraped: A shadow-library org (Anna’s Archive) claims it systematically extracted and archived ~300TB of Spotify’s music catalog.

DRM protections bypassed at scale: The story isn’t “piracy” — it’s the implied ability to automate extraction across hundreds of TB, suggesting DRM and monitoring didn’t stop (or detect) large-scale abuse.

Shadow libraries targeting commercial platforms: This represents an escalation from books/papers into commercial entertainment—indicating better resourcing and more sophisticated operations.

Why it matters

Content protection is not just crypto: Streaming content must be decrypted to play — meaning attackers can target the playback/extraction layer, not just “break encryption.”

Detection blind spots become existential at scale: If 300TB can move without tripping alarms, your thresholds are likely tuned for individual abuse, not distributed/systematic extraction.

This generalizes beyond music: Any subscription/content platform (video, news, e-learning, SaaS data exports) should assume similar extraction patterns can be attempted against them.

Actionable Insights

Extraction detection: Look for large, coordinated downloads that stay under your alarms by spreading traffic across many accounts, IPs, and sessions.

DRM reality check: Make sure your protections hold up against determined, well-resourced actors—not casual pirates.

Behavioral detection: Flag “organized scraping” by spotting many accounts behaving like one actor across sessions and devices.

Response readiness: Predefine detect → throttle → contain → communicate before you’re stuck saying “we’re investigating.”

Threat model update: Assume shadow libraries are patient and disciplined, not just opportunistic or profit-driven.
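The extraction and behavioral detection points above come down to aggregating activity across accounts that share some signal, instead of judging each account alone. A minimal sketch, assuming download events are already tagged with a shared `cluster_key` (a hypothetical field, such as a device fingerprint or payment instrument) and using made-up thresholds:

```python
from collections import defaultdict


def flag_coordinated_extraction(events, per_account_limit, cluster_limit):
    """Flag clusters whose combined volume is high while every account looks normal.

    events: iterable of (account_id, cluster_key, bytes_downloaded).
    Returns {cluster_key: total_bytes} for clusters of two or more accounts
    that each stay at or under per_account_limit but together exceed
    cluster_limit.
    """
    # cluster_key -> account_id -> bytes downloaded
    volume = defaultdict(lambda: defaultdict(int))
    for account_id, cluster_key, nbytes in events:
        volume[cluster_key][account_id] += nbytes

    flagged = {}
    for cluster_key, accounts in volume.items():
        total = sum(accounts.values())
        all_under_alarm = all(v <= per_account_limit for v in accounts.values())
        if len(accounts) > 1 and all_under_alarm and total > cluster_limit:
            flagged[cluster_key] = total
    return flagged
```

Accounts that individually exceed the per-account limit are deliberately left to whatever per-account alerting already exists; this pass only covers the blind spot where no single account trips an alarm.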

Boardroom Talking Point

“If a shadow library can extract ~300TB from a platform as mature as Spotify, it’s a signal our content/data defenses can’t rely on DRM alone — we need extraction detection, behavioral analytics, and an incident playbook for systematic scraping before we become the next case study.”

Friday – Supabase: Ignoring RLS Warnings? Your Data Might Be Public—Read the Fine Print

Sentiment Analysis: 15% Positive | 60% Negative | 25% Neutral

What happened

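RLS misconfiguration at scale: Developers are disabling Supabase’s Row Level Security, never enabling it, or writing overly permissive policies, exposing databases to public access through auto-generated APIs.

Default ≠ secure: Supabase enables RLS by default for tables created in the dashboard’s Table Editor, but tables created through raw SQL are not covered automatically. An RLS-enabled table with no policies returns nothing to the public API key, yet a catch-all policy (for example, USING (true)) leaves even an “RLS-enabled” table publicly queryable.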

Developer behavior, not platform flaw: The issue stems from “vibe coding” approaches treating security as friction, amplified by Supabase’s popularity for rapid prototyping.

Why it matters

Auto-generated APIs amplify misconfiguration: Supabase exposes databases directly to clients—when RLS is off or wrong, tables become publicly queryable.

Security debt compounds: Shortcuts taken early become much harder to remediate as schemas, permissions, and user bases grow.

BaaS lowers all barriers: Backend-as-a-service platforms reduce friction for both rapid development and catastrophic misconfigurations.

Actionable Insights

Audit all Supabase deployments: Verify RLS is enabled with explicit, tested policies for every sensitive table—treat misconfiguration as critical.

Add mandatory security gates: Implement CI/CD checks blocking deployments without documented RLS policies.

Require platform-specific training: Developers need education on how Supabase/Firebase handle security differently than traditional backends.
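A CI gate along these lines can be sketched by querying the standard Postgres catalogs (`pg_tables.rowsecurity` and the `pg_policies` view) and failing the build on any finding. The function name, the finding messages, and the "matches every row" heuristic are assumptions for illustration; running the query requires a database connection, which is left out here.

```python
# One row per (table, policy); tables without policies yield a single row
# with NULL policy columns because of the left join.
RLS_AUDIT_SQL = """
select t.tablename, t.rowsecurity, p.policyname, p.qual
from pg_tables t
left join pg_policies p
  on p.schemaname = t.schemaname and p.tablename = t.tablename
where t.schemaname = 'public'
"""


def rls_findings(rows):
    """rows: (tablename, rls_enabled, policyname, qual) tuples from RLS_AUDIT_SQL."""
    tables = {}
    for table, rls_enabled, policy, qual in rows:
        entry = tables.setdefault(table, {"rls": rls_enabled, "policies": []})
        if policy is not None:
            entry["policies"].append((policy, qual))

    findings = []
    for table in sorted(tables):
        entry = tables[table]
        if not entry["rls"]:
            findings.append(f"{table}: RLS disabled")
            continue
        if not entry["policies"]:
            findings.append(f"{table}: no policies defined")
        for policy, qual in entry["policies"]:
            # Heuristic only: a USING expression of literally "true".
            if qual is not None and qual.strip().lower() == "true":
                findings.append(f"{table}: policy '{policy}' matches every row")
    return findings
```

In a pipeline, a non-empty result would block the deployment. The check is intentionally coarse: it cannot tell whether a policy is correct, only whether one exists and is not an obvious catch-all, so it complements policy tests rather than replacing them.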

Boardroom Talking Point

“Supabase misconfiguration is exposing customer data when developers disable or misapply Row Level Security. We should audit our Supabase footprint and enforce automated guardrails—development shortcuts create security debt that compounds as systems scale.”

There you have it: five days, five headlines—each with a breakdown of what happened, why it matters for tech leaders, what to do next, and what to say to show stakeholders you’re aware and prepared for the future.

Back with another Weekly Zeitgeist next week.

Enjoy your weekend!

If you’d rather see trends personalized to you (mapped, explained, and ranked to your domains, your vendors, and your board conversations), try Zeitgeister.

It’s free, and you’ll get:

🧠 Agnostic trend feed across Reddit, HN, news, and more

📊 Synthesized briefings with context, risks, and opportunities

🗣️ Stakeholder-ready talking points for CEOs, boards, and PE partners

⏱️ A couple of hours saved each week on “what’s going on and why do I care?”

Really sharp synthesis of the week's infra failures. The Spotify case is wild because it shows that 300TB can leave without tripping alarms when it's spread across accounts and sessions. Back when I was working on anomaly detection for an e-learning platform, we had similar blind spots where individual user behavior looked normal but coordinated extraction at scale was completely invisible to us. The actionable insight about behavioral detection for "organized scraping" is spot-on and probably applies to most subscription platforms right now.