Weekly Zeitgeister: Automation Tools Get Exploited As AI Creeps Into Your Inbox

Plus: Claude Code stabilizes, Meta bets on minireactors, and finance hits an AI wall. Powered by Zeitgeister.

What is Weekly Zeitgeister?

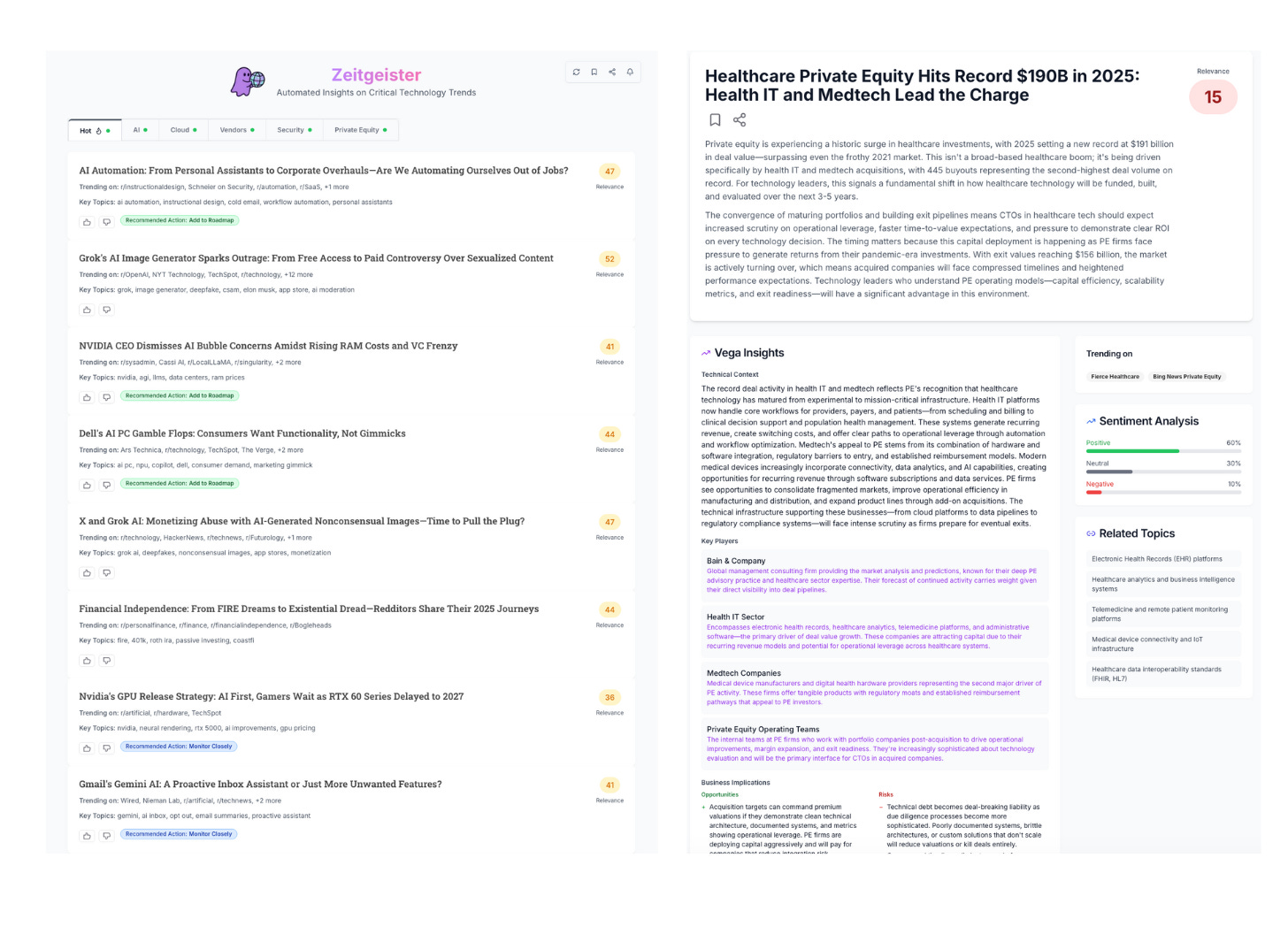

Weekly Zeitgeister is a new series powered by Zeitgeister, a tool I built to track what’s actually moving in tech, rank top stories, and turn them into usable insight. Each week, I pull one high-signal headline per day from Zeitgeister, attach a brief summary, and then share why it matters if you’re building or running software at scale.

This Week at a Glance

Monday’s Headline: Google’s Gemini AI Invades Gmail: Users Brace for Unwanted AI Overload

Tuesday’s Headline: n8n’s Ni8mare: Critical RCE Flaw Exposes Workflow Automation to Full Takeover

Wednesday’s Headline: Claude Code 2.1.0: From Buggy CLI to Smoother Workflows and Broader Appeal

Thursday’s Headline: Meta’s Nuclear Power Play: Betting Big on Minireactors to Fuel AI Data Centers

Friday’s Headline: AI Fatigue in Finance: Is the Industry’s Love Affair with AI Turning Sour?

Monday – Google’s Gemini AI Invades Gmail: Users Brace for Unwanted AI Overload

Sentiment Analysis: 15% Positive | 20% Neutral | 65% Negative

What happened

Google is baking Gemini features directly into Gmail/Workspace (summaries, “smart” prioritization, conversational search), reportedly enabled by default for many users.

The reaction from technical users is largely negative. The complaint is not “AI is bad” but forced adoption, loss of control, and worsening reliability and UX in core Gmail.

The backlash is reigniting interest in alternatives (privacy-first providers and even self-hosted email setups).

Why it matters

Risk (reputation + reliability): Gmail is a mission-critical comms layer. If trust drops, teams route around it - or leave.

Cost (change + support): Default-on workflow changes create confusion, training overhead, and support tickets, especially across non-technical orgs.

Speed (productivity): AI that adds friction to triage/search can slow response times before it helps, and missed emails carry real downside.

Leverage (vendor strategy): This is a blueprint for how not to roll out AI in core tools, creating an opening for opt-in, privacy-forward competitors.

Actionable Insights

Set an “AI in core tools” policy: default-off vs opt-in, who can enable, what data is processed, and what gets logged/retained.

Pilot like you would a platform change: run a 1–2 week test with power users; track missed critical emails, time-to-triage, and false “priority” flags; keep a rollback plan.

Harden sensitive workflows: for legal/finance/HR/security, decide whether summaries/search should be disabled, and document an approved alternative workflow for handling sensitive comms.
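The pilot metrics above can be rolled up with very little code. This is a minimal sketch, assuming someone on the pilot team labels each email by hand afterward; the `TriagedEmail` record and `pilot_summary` helper are hypothetical names, not part of any Gmail or Workspace API.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class TriagedEmail:
    critical: bool            # ground truth, labeled by a human after the pilot
    flagged_priority: bool    # what the AI prioritization decided
    seconds_to_triage: float  # time from arrival to first human action


def pilot_summary(emails):
    """Summarize the three pilot metrics for a non-empty list of emails."""
    missed = sum(e.critical and not e.flagged_priority for e in emails)
    false_flags = sum(e.flagged_priority and not e.critical for e in emails)
    return {
        "missed_critical": missed,
        "false_priority_flags": false_flags,
        "median_seconds_to_triage": median(e.seconds_to_triage for e in emails),
    }


sample = [
    TriagedEmail(critical=True, flagged_priority=False, seconds_to_triage=900),
    TriagedEmail(critical=False, flagged_priority=True, seconds_to_triage=120),
    TriagedEmail(critical=True, flagged_priority=True, seconds_to_triage=60),
]
print(pilot_summary(sample))
```

Comparing the same summary for a control group with the AI features off gives you a concrete basis for the rollback decision.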

Boardroom Talking Point

“If we’re going to put AI into mission-critical workflows, it has to be opt-in, controllable, and reversible, or it becomes a trust and productivity risk, not an upgrade.”

Tuesday – n8n’s Ni8mare: Critical RCE Flaw Exposes Workflow Automation to Full Takeover

Sentiment Analysis: 15% Positive | 20% Neutral | 65% Negative

What happened

A max-severity vulnerability in n8n (open-source workflow automation) was disclosed: CVE-2026-21858 (CVSS 10.0), nicknamed “Ni8mare.”

The flaw can enable unauthenticated remote code execution, meaning attackers may take control of exposed/self-hosted n8n instances without credentials.

Because n8n typically connects to lots of other systems (SaaS apps, databases, internal tools), a compromise can quickly turn into credential theft + lateral movement across your stack.

Why it matters

Risk: One exposed automation server can become a “master key” to everything it touches (secrets, API keys, integrations, data stores).

Cost + downtime: Incident response here isn’t just “patch and move on” - it often includes token rotation, integration audits, and workflow integrity checks.

Reputation: Automation tools sit near the center of ops; a breach can spill into customer systems and trigger disclosure/compliance obligations.

Leverage: This is a forcing function to treat automation platforms like production infrastructure, not “just another tool.”

Actionable Insights

Find + patch fast: Inventory all n8n instances (including “shadow IT”), prioritize anything internet-reachable, patch immediately, and treat publicly exposed instances as potentially compromised until proven otherwise.

Reduce blast radius: Move automation tools behind stricter network controls (segmentation, allowlists, least-privileged service accounts) so one compromise can’t jump everywhere.

Assume secrets are at risk: Rotate credentials/tokens used by workflows, review recent workflow edits/executions, and add monitoring/alerts for unusual runs and new outbound connections.
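The “find + patch fast” step can be sketched as a triage pass over whatever inventory you have. This is an illustration under stated assumptions, not an n8n tool: the `Instance` fields and the `triage` helper are hypothetical, and `FIXED_VERSION` is a placeholder that should be replaced with the fixed release named in n8n's security advisory.

```python
from dataclasses import dataclass

# Placeholder only: take the real fixed version from the vendor advisory.
FIXED_VERSION = (9, 9, 9)


@dataclass
class Instance:
    name: str
    version: tuple            # (major, minor, patch) as reported by the instance
    internet_reachable: bool  # from your own network scan or asset inventory


def triage(instances, fixed_version):
    """Return unpatched instances, internet-reachable ones first."""
    vulnerable = [i for i in instances if i.version < fixed_version]
    # False sorts before True, so reachable instances lead the list.
    return sorted(vulnerable, key=lambda i: (not i.internet_reachable, i.name))


inventory = [
    Instance("internal-etl", (1, 0, 0), internet_reachable=False),
    Instance("public-hooks", (1, 0, 0), internet_reachable=True),
    Instance("already-patched", FIXED_VERSION, internet_reachable=True),
]
for inst in triage(inventory, FIXED_VERSION):
    note = "assume compromised, rotate secrets" if inst.internet_reachable else "patch"
    print(f"{inst.name}: {note}")
```

The sort order encodes the advice above: anything unpatched and reachable from the internet goes to the top and is handled as a possible compromise, not just a patch job.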

Boardroom Talking Point

“This wasn’t a bug in a niche tool; it’s an unauthenticated takeover path into the automation layer that connects our most sensitive systems, so we’re treating it like a core-infrastructure incident, not a routine patch.”

Wednesday – Claude Code 2.1.0: From Buggy CLI to Smoother Workflows and Broader Appeal

Sentiment Analysis: 55% Positive | 25% Neutral | 20% Negative

What happened

Claude Code shipped a meaningful 2.1.0 update, pushing it from “AI coding tool” toward a broader automation/agent workflow platform (with improved accessibility via VS Code).

Adoption is growing around “agent-based dev / vibe coding,” where tools execute multi-step workflows rather than only generating code snippets.

A recent semver/version-parsing issue, triggered by format changes, crashed the CLI. It is an example of how fragile integration points can break real workflows.

Concerns are surfacing around permission boundaries, including inconsistent enforcement and agents accessing more of the filesystem than intended in some scenarios.

Why it matters

Speed vs. stability: These tools can compress build cycles, but one brittle release can halt workflows across teams.

Hidden cost: Fast “AI-built” scaffolding becomes expensive if architecture, tests, and docs don’t keep up.

Security/compliance risk: If agent permissions aren’t dependable, you can’t safely use them around sensitive code/data.

Leverage: Teams that operationalize governance + testing early will outpace peers as agent tooling becomes standard.

Actionable Insights

Set explicit agent governance before broad rollout: define approved tasks, required human review points, and hard boundaries (repos, directories, data classes).

Build integration testing + rollback into your AI tooling ops: pin versions, run smoke tests that actually execute the tool, and keep a fast downgrade path.

Pilot in low-blast-radius contexts first: use agents for prototyping/internal automation, then expand only after monitoring shows stable behavior and clean boundaries.
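Pinning a version and running a smoke test that actually executes the tool can be sketched in a few lines. This is a generic illustration, not Claude Code's own tooling: `parse_version` and `smoke_test` are hypothetical helpers, and the command you pass in (including whatever flag prints the version) is an assumption you should confirm for your tool.

```python
import re
import subprocess

_VERSION_RE = re.compile(r"(\d+)\.(\d+)\.(\d+)")


def parse_version(text):
    """Return the first MAJOR.MINOR.PATCH triple in text, or None if absent."""
    match = _VERSION_RE.search(text)
    return tuple(int(part) for part in match.groups()) if match else None


def smoke_test(command, pinned):
    """Run command and report (ok, reason) against the pinned version."""
    try:
        result = subprocess.run(command, capture_output=True, text=True, timeout=30)
    except (OSError, subprocess.TimeoutExpired) as exc:
        return False, f"failed to run: {exc}"
    if result.returncode != 0:
        return False, f"exit code {result.returncode}"
    found = parse_version(result.stdout + result.stderr)
    if found != pinned:
        return False, f"expected {pinned}, found {found}"
    return True, "ok"
```

The parser returns `None` on an unexpected format instead of raising, so a format change shows up as a failed check in CI rather than a crash in someone's terminal. A failed check is the signal to use your downgrade path.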

Boardroom Talking Point

“AI coding agents can accelerate delivery - but without governance and guardrails, they also accelerate outages, security exposure, and long-term maintenance cost.”

Thursday – Meta’s Nuclear Power Play: Betting Big on Minireactors to Fuel AI Data Centers

Sentiment Analysis: 40% Positive | 25% Neutral | 35% Negative

What happened

Meta signed major nuclear power agreements to feed AI growth. Deals with TerraPower, Oklo, and Vistra target ~6.6GW delivered by 2035 to support Meta’s AI data centers.

They’re effectively underwriting new reactor buildout, not just buying from the grid. It’s a shift toward “secure power supply” as a core infrastructure strategy.

This is a public signal that electricity is becoming a top constraint on AI scale. Hyperscalers are treating energy access like a competitive moat, not a back-office utility problem.

Why it matters

Cost + risk: Power scarcity can cap AI expansion, drive up long-term operating costs, and create stranded investments if timelines slip.

Speed: If you can’t secure power, you can’t reliably secure compute - roadmaps stall regardless of model progress.

Reputation: Nuclear is politically and publicly sensitive; missteps (or delays) become headline risk.

Leverage: Power-backed capacity becomes negotiating power with cloud/colo partners and a differentiator for enterprises that need guaranteed runway.

Actionable Insights

Run an “AI power budget” alongside your compute budget. Forecast MW needs by region and timeline, and review it quarterly like you would cloud spend.

Stress-test your AI roadmap against power constraints. Pre-plan what gets paused if capacity is capped, permitting slips, or energy prices spike.

Push vendors for power-backed commitments. In procurement, ask for guaranteed capacity tied to power availability (not just “GPU availability”), and bake volatility into contracts.
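An “AI power budget” comes down to simple arithmetic you can rerun each quarter. A rough sketch, assuming you estimate draw as accelerator count × watts per accelerator (including server overhead) × PUE, where PUE is total facility power divided by IT power. The helper names, regions, and numbers below are all made up for illustration.

```python
def facility_mw(gpu_count, watts_per_gpu, pue):
    """Estimated facility draw in MW: IT load scaled by PUE."""
    return gpu_count * watts_per_gpu * pue / 1_000_000


def over_budget(forecast, secured_mw):
    """Return {region: shortfall in MW} where forecast draw exceeds secured power."""
    gaps = {}
    for region, (gpus, watts, pue) in forecast.items():
        need = facility_mw(gpus, watts, pue)
        secured = secured_mw.get(region, 0.0)
        if need > secured:
            gaps[region] = round(need - secured, 2)
    return gaps


# Illustrative inputs only: (accelerators, watts each, PUE) per region.
forecast = {
    "us-east": (10_000, 1_000, 1.3),  # about 13 MW
    "eu-west": (2_000, 1_000, 1.2),   # about 2.4 MW
}
secured = {"us-east": 10.0, "eu-west": 5.0}
print(over_budget(forecast, secured))
```

Any region that appears in the result is a place where the roadmap assumes power you have not secured, which is the list to bring into vendor negotiations.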

Boardroom Talking Point

“AI scale is now gated by electricity. If we don’t plan power like we plan cloud capacity, our roadmap becomes a wish.”

Friday – AI Fatigue in Finance: Is the Industry’s Love Affair with AI Turning Sour?

Sentiment Analysis: 15% Positive | 25% Neutral | 60% Negative

What happened

Finance teams are being pushed into “AI adoption” fast - often by exec mandate, not engineering readiness - while delivery pressure stays the same.

“AI slop” is showing up in production paths: more LLM-generated code, weaker review discipline, and more downstream cleanup.

Offshoring is resurging with an AI twist: output goes up, but review/quality becomes the bottleneck, especially for lean onshore leads.

Regulated constraints make it worse: cloud limits/local hosting requirements + rushed AI integration = higher operational and compliance fragility.

Why it matters

Risk: In regulated systems, rushed/under-reviewed changes raise the chance of control failures, security gaps, and incident-driven audits.

Cost: Tech debt compounds quietly, then shows up later as expensive remediation when budgets tighten and the hype cools.

Speed: Shipping faster isn’t winning if reviews can’t keep up; cycle time eventually slows under rework, escalations, and outages.

Reputation: Customer trust in finance is brittle. One quality or compliance miss can undo months of “innovation” narrative.

Actionable Insights

Install “AI review gates” now: require human review + test coverage for any AI-assisted code; treat LLM output like junior work until proven otherwise.

Fix the throughput math: if AI increases output, increase review capacity (senior reviewers, smaller PRs, stricter merge rules) or you’ll drown in defects.

Create explicit “AI-free zones”: prohibit or tightly restrict AI-generated code in critical workflows (auth, payments, reporting, regulated pipelines) unless controls are in place.
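The throughput math is easy to make concrete. A minimal sketch, assuming a steady weekly rate of incoming PRs and a fixed weekly review capacity; `review_backlog` is a hypothetical helper and the numbers are illustrative.

```python
def review_backlog(prs_per_week, reviews_per_week, weeks, start=0):
    """Return the unreviewed-PR backlog at the end of each week."""
    backlog = start
    history = []
    for _ in range(weeks):
        # Backlog grows by the gap between output and review capacity,
        # and cannot drop below zero.
        backlog = max(0, backlog + prs_per_week - reviews_per_week)
        history.append(backlog)
    return history


# Output rises from 40 to 60 PRs a week while review capacity stays at 40.
print(review_backlog(prs_per_week=60, reviews_per_week=40, weeks=4))
```

If output rises and review capacity stays flat, the backlog grows by the difference every week. Either capacity goes up, PRs wait longer, or reviews get thinner, and the last option is where the defects come from.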

Boardroom Talking Point

“If AI lets us ship more code than we can safely review, we’re not moving faster; we’re just accelerating risk.”

There you have it: five days, five headlines - each with a breakdown of what happened, why it matters for tech leaders, what to do next, and what to say to show stakeholders you’re aware and prepared for the future.

Back with another Weekly Zeitgeister next week.

Enjoy your weekend!

If you’d rather see trends personalized to you - mapped, explained, and ranked to your domains, your vendors, and your board conversations - try Zeitgeister.

It’s free, and you’ll get:

🧠 Agnostic trend feed across Reddit, HN, news, and more

📊 Synthesized briefings with context, risks, and opportunities

🗣️ Stakeholder-ready talking points for CEOs, boards, and PE partners

⏱️ Saves me a couple of hours a week on “what’s going on and why do I care?”

Zeitgeister is spot on.

Fed up with AI overload via prompts in our email inbox, search, messaging app, even photo memories.